Test Equating Based on Classical Test Theory (CTT)

Equivalent (Random) Groups

In an equivalent group design, a random sample of examinees takes form X and a second random sample takes form Y. Because these are random samples from the same population, any differences in the distribution of scores are again assumed to be a function of test difficulty because the randomization should ensure that the two groups are equivalent in the ability being assessed.

Example Setup

First, we will install and activate the equate package.

install.packages("equate")

library("equate")Next, we will import the test forms (X and Y) into R. Each form has 1000 students taking either form X or form Y. Scores in both forms range from 20 to 50 points. In this example, assume that X is the new test form and Y is the reference test form. We want to place scores from form X into the scale of form Y.

# Test form X

formx <- read.csv("formx.csv", header = TRUE)

formy <- read.csv("formy.csv", header = TRUE)

# Preview the data sets

head(formx)## score form

## 1 35 x

## 2 34 x

## 3 32 x

## 4 35 x

## 5 26 x

## 6 47 xhead(formy)## score form

## 1 43 y

## 2 34 y

## 3 31 y

## 4 38 y

## 5 37 y

## 6 42 y# Combine the forms

formxy <- rbind(formx, formy)The equate package analyzes score distributions as

frequency tables. These frequency tables are created using the

as.freqtab function in equate. So, our

data must be restructured as a frequency table in order to work with the

functions in equate.

# Add score frequencies to the data

data <- as.data.frame(table(formxy$score, formxy$form))

names(data) <- c("total", "form", "count")

head(data)## total form count

## 1 23 x 2

## 2 24 x 6

## 3 25 x 5

## 4 26 x 14

## 5 27 x 7

## 6 28 x 20# Restructure the data as a frequency table

data_x <- as.freqtab(data[data$form == "x", c("total", "count")], scales = 20:50)

data_y <- as.freqtab(data[data$form == "y", c("total", "count")], scales = 20:50)

head(data_x)## total count

## 1 20 0

## 2 21 0

## 3 22 0

## 4 23 2

## 5 24 6

## 6 25 5head(data_y)## total count

## 1 20 0

## 2 21 0

## 3 22 0

## 4 23 0

## 5 24 0

## 6 25 0Now let’s see a descriptive summary of the forms.

# Descriptive summary of the forms

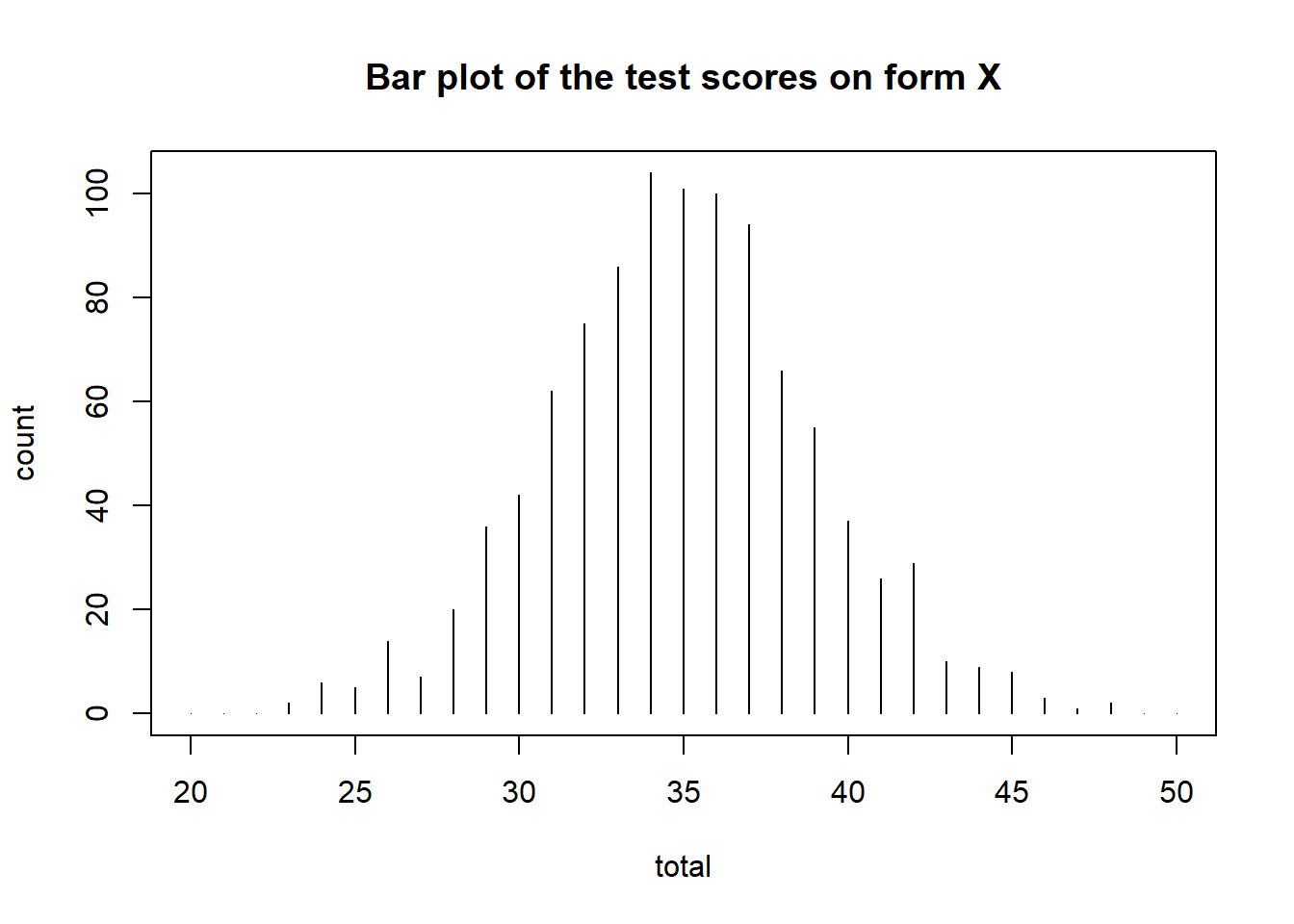

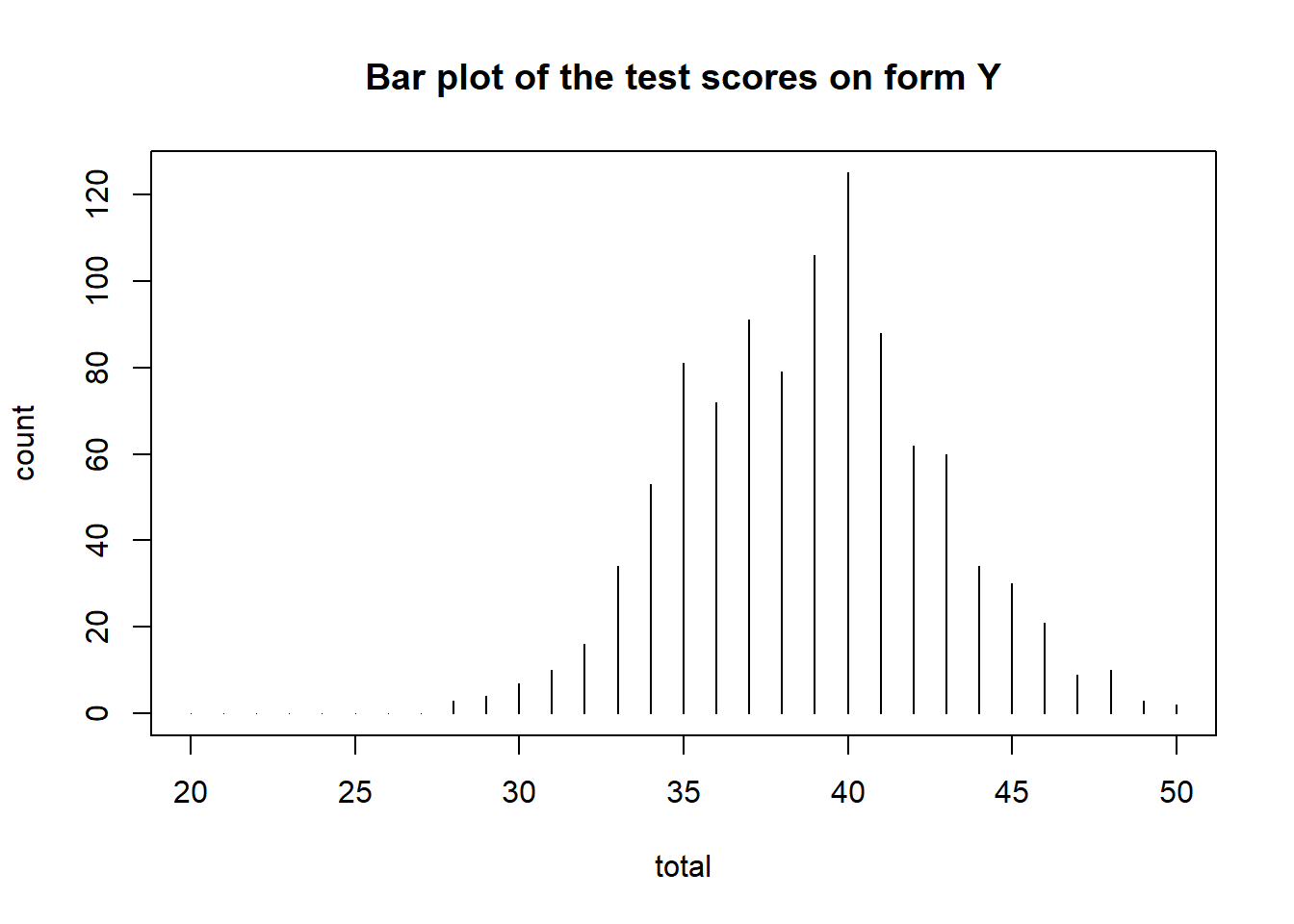

rbind(form_x = summary(data_x), form_y = summary(data_y))## mean sd skew kurt min max n

## form_x 34.925 4.098069 0.04373977 3.169021 23 48 1000

## form_y 38.840 3.815883 0.05785096 2.870284 28 50 1000We can also visualize the test score distributions.

plot(data_x, main = "Bar plot of the test scores on form X")

plot(data_y, main = "Bar plot of the test scores on form Y")

Linear Equating Methods

The linear equating methods include identity (i.e., no equating), mean, linear, and general linear functions.

Mean Equating

This method adjusts the scores from the new form (X) using the mean difference between the two forms (X and Y) as a fixed constant.

mean_yx <- equate(data_x, data_y, type = "mean")

mean_yx##

## Mean Equating: data_x to data_y

##

## Design: equivalent groups

##

## Summary Statistics:

## mean sd skew kurt min max n

## x 34.92 4.10 0.04 3.17 23.00 48.00 1000

## y 38.84 3.82 0.06 2.87 28.00 50.00 1000

## yx 38.84 4.10 0.04 3.17 26.91 51.92 1000

##

## Coefficients:

## intercept slope cx cy sx sy

## 3.915 1.000 35.000 35.000 30.000 30.000We can also check out the concordance table to see how X scores changed after being equated to form Y. The concordance table indicates that an examinee who got a 20 on form X would be expected to get a 23.92 on form Y. That is, it is a simple transformation of form X + 3.915.

head(mean_yx$concordance)## scale yx

## 1 20 23.915

## 2 21 24.915

## 3 22 25.915

## 4 23 26.915

## 5 24 27.915

## 6 25 28.915We can merge the concordance table back to form X so that we can see both old and new scores together for each examinee.

# Save the concordance table

form_yx <- mean_yx$concordance

# Rename the first column to total

colnames(form_yx)[1] <- "total"

# Merge the concordance table to form x

data_xy <- merge(data_x, form_yx)

head(data_xy)## total count yx

## 1 20 0 23.915

## 2 21 0 24.915

## 3 22 0 25.915

## 4 23 2 26.915

## 5 24 6 27.915

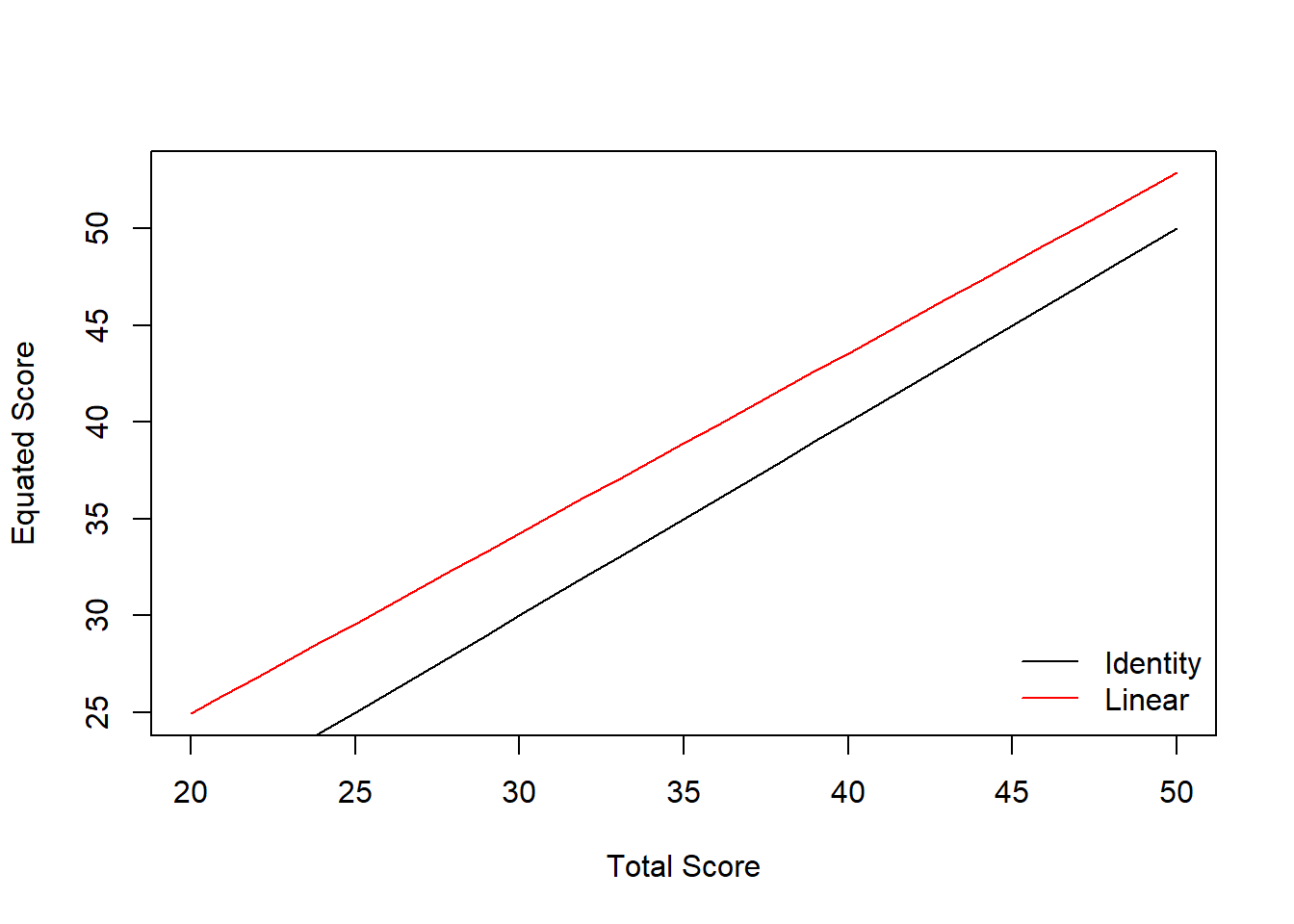

## 6 25 5 28.915Linear Equating

This method adjusts the scores from the new form (X) using both the mean and standard deviation differences between the two forms (X and Y) using a linear equation.

linear_yx <- equate(data_x, data_y, type = "linear")

linear_yx##

## Linear Equating: data_x to data_y

##

## Design: equivalent groups

##

## Summary Statistics:

## mean sd skew kurt min max n

## x 34.92 4.10 0.04 3.17 23.00 48.00 1000

## y 38.84 3.82 0.06 2.87 28.00 50.00 1000

## yx 38.84 3.82 0.04 3.17 27.74 51.01 1000

##

## Coefficients:

## intercept slope cx cy sx sy

## 6.3199 0.9311 35.0000 35.0000 30.0000 30.0000We can check out the equated scores against the original scores as follows:

plot(linear_yx)

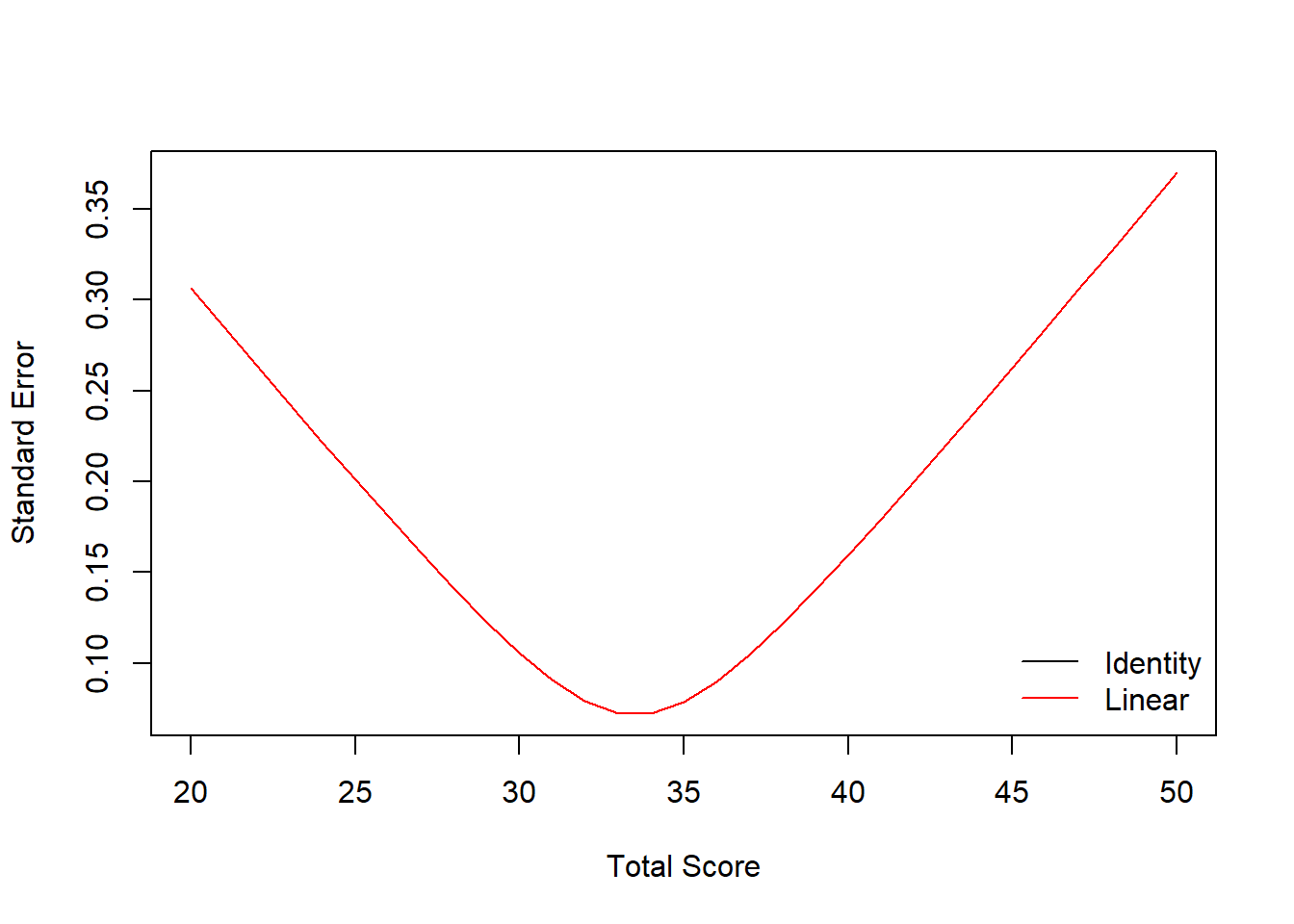

We can also run a bootstrap for the equating process and create a standard error plot:

linear_yx_boot <- equate(data_x, data_y, type = "linear", boot = TRUE, reps = 5)

plot(linear_yx_boot, out = "se")

Non-Linear Equating

The nonlinear equating methods include equipercentile, circle-arc, and composite functions.

Equipercentile Equating

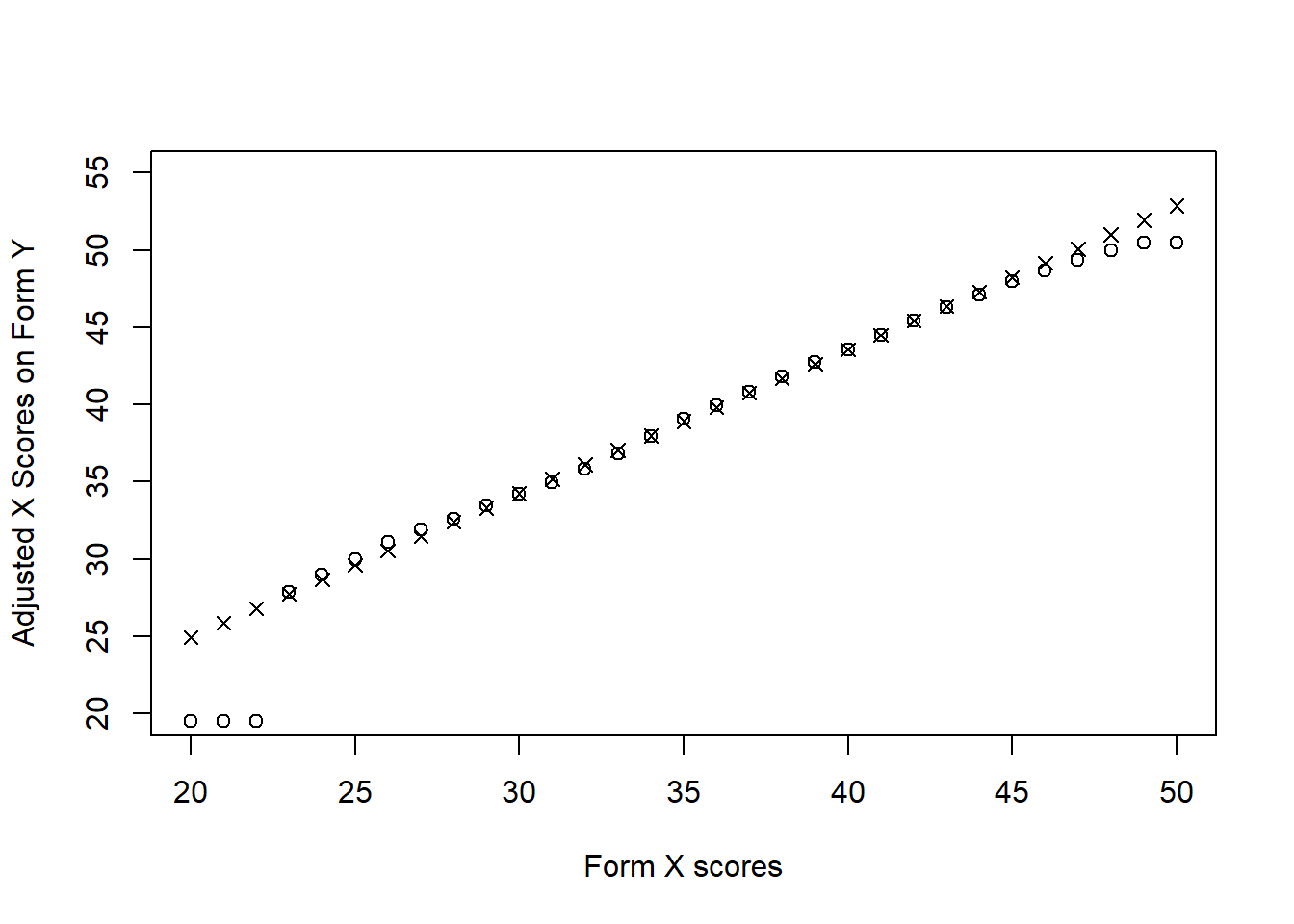

Equipercentile equating works by breaking up the scores on the X and Y forms into percentiles. Then percentiles of form X are matched to the percentiles on form Y.

equi_yx <- equate(data_x, data_y, type = "equipercentile")Now, let’s see how the X scores have been adjusted based on the percentile ranks of forms X and Y. The following figure shows that the biggest discrepancies between these two functions are at the tails of the distribution.

plot(equi_yx$concordance$yx ~ equi_yx$concordance$scale, type = "p", xlab = "Form X scores",

ylab = "Adjusted X Scores on Form Y", ylim = c(20, 55))

points(linear_yx$concordance$yx ~ linear_yx$concordance$scale, pch = 4)

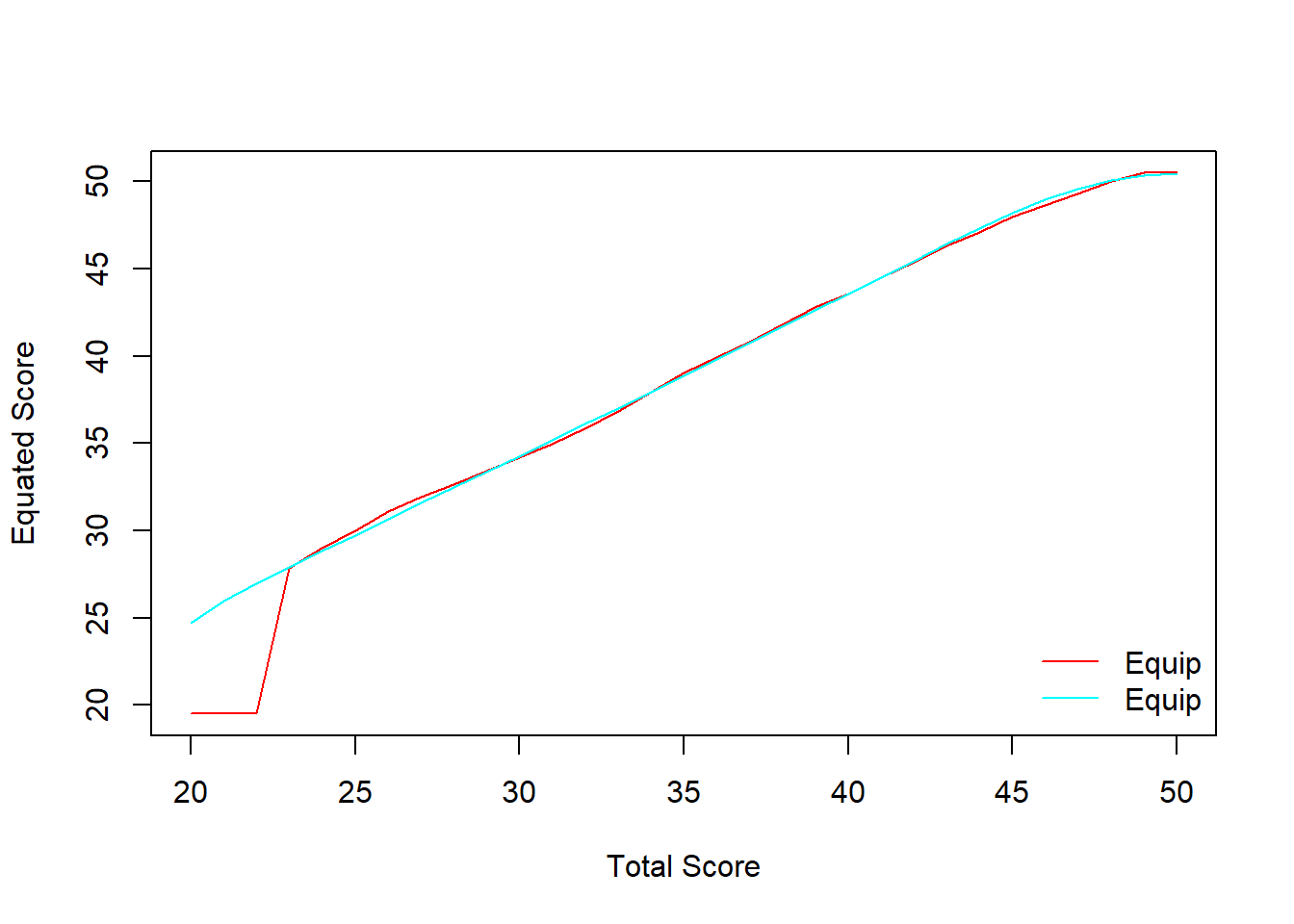

We can also add loglinear smoothing into the process and compare it against the regular equipercentile. The smooth line indicates the equipercentile with loglinear smoothing whereas the straight line (with a breaking point) indicates the regular equipercentile.

equismooth_yx <- equate(data_x, data_y, type = "equipercentile", smooth = "loglin", degree = 3)

# Compare equating functions

plot(equi_yx, equismooth_yx, addident = FALSE)

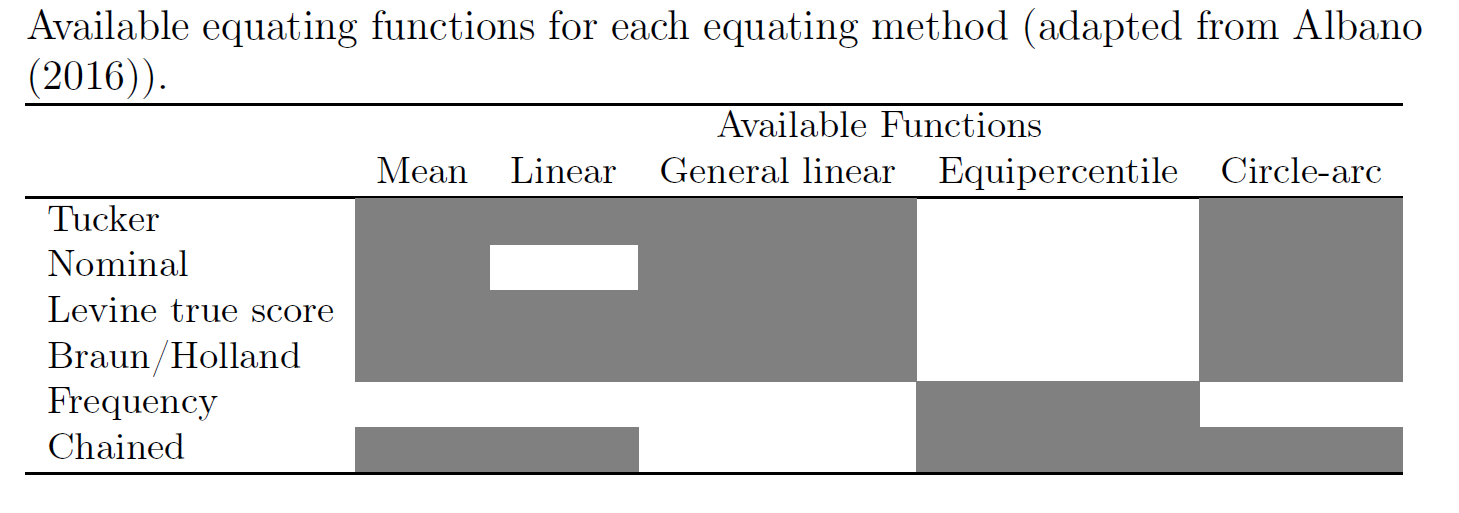

Nonequivalent Groups

In a nonequivalent group design, the population of examinees taking the different forms cannot be assumed to be from the same population. Therefore, potential differences in their abilities on the construct(s) must be accounted for. When a nonequivalent group design is used, a particular equating method must be specified. Generally, these methods work by relating the total scores on form X and form Y through the scores on a common set of items appearing on both forms (i.e., the common anchor scores) and the creation of a weighted, synthetic population (see Albano (2016) for more information on these methods). The equating methods available in the equate package are:

- Tucker

- Nominal

- Levine true score

- Braun/Holland

- Frequency

- Chained

The following table shows the combinations of these methods with the other equating types that we have seen so far:

Example Setup

The negd data set contains data on 2,000 examinees responding to 35 questions on a particular form of the test (either form X or form Y). Questions 1 through 25, labeled q.1, …, q.25, are unique to each form, while questions 26 through 35, labeled a.1, …, a.10, are the common anchor items.

negd <- read.csv("negd.csv", header = TRUE)

head(negd)## q.1 q.2 q.3 q.4 q.5 q.6 q.7 q.8 q.9 q.10 q.11 q.12 q.13 q.14 q.15 q.16 q.17

## 1 1 0 0 0 1 0 1 0 0 0 1 0 0 1 1 1 1

## 2 0 0 1 1 1 0 0 0 1 0 1 1 1 0 0 0 0

## 3 1 0 1 1 1 1 1 1 1 0 0 1 1 1 0 0 0

## 4 0 1 1 0 0 0 0 1 0 0 0 0 1 1 0 1 0

## 5 0 0 1 0 0 1 0 1 0 1 0 0 1 0 0 0 1

## 6 0 0 1 1 1 0 1 0 0 0 1 1 1 1 0 1 1

## q.18 q.19 q.20 q.21 q.22 q.23 q.24 q.25 a.1 a.2 a.3 a.4 a.5 a.6 a.7 a.8 a.9

## 1 0 0 0 1 1 0 1 0 0 0 0 0 0 0 0 1 0

## 2 0 1 0 0 1 0 1 1 1 1 0 0 1 0 1 0 1

## 3 0 0 0 1 1 0 0 0 0 1 1 1 1 0 1 0 0

## 4 0 0 1 1 1 0 1 0 0 1 1 1 0 1 1 1 1

## 5 0 0 1 0 1 0 1 1 0 0 1 1 0 1 0 0 0

## 6 1 1 0 1 1 1 0 1 0 1 1 1 1 1 1 0 1

## a.10 form

## 1 0 x

## 2 0 x

## 3 1 x

## 4 0 x

## 5 1 x

## 6 1 xBecause the data are in a person-by-item format, for each person, we need to calculate their total score on the form, excluding the common anchor items, then their score on the common anchor items. Once this is done, frequency tables can be created, plotted, and then passed onto the equate function.

# Calculate total scores based on unique items

negd$total <- rowSums(negd[, 1:25])

# Calculate scores based on anchor items

negd$anchor <- rowSums(negd[, 26:35])

# Create frequency tables (total score range: 0-25; anchor score range: 0-10)

negd_x <- freqtab(negd[1:1000, c("total", "anchor")], scales = list(0:25, 0:10))

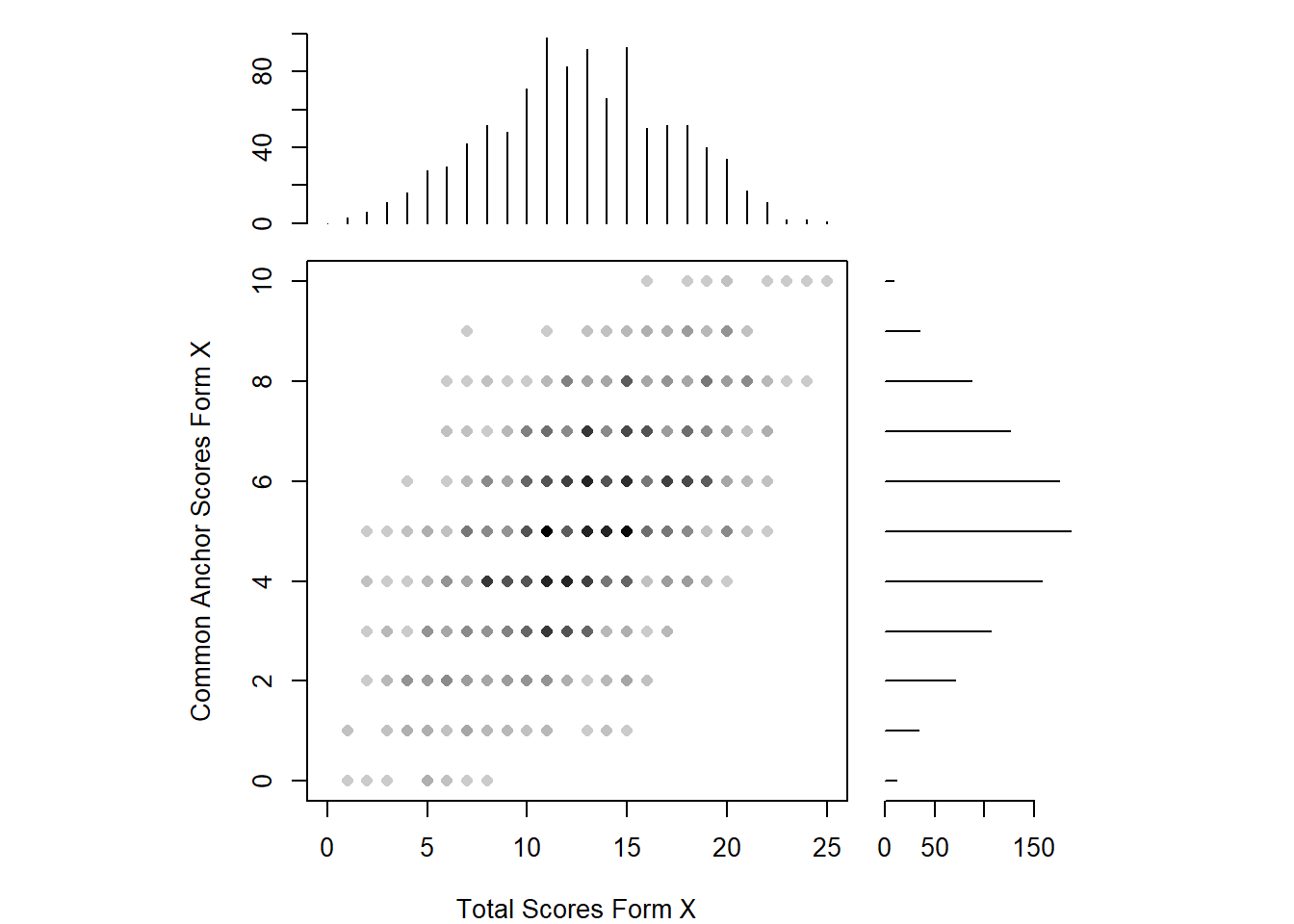

negd_y <- freqtab(negd[1001:2000, c("total", "anchor")], scales = list(0:25, 0:10))Now we will see a scatterplot of the common anchor scores on form X against the total scores on form X. Plotted along the axes are the marginal distributions of these scores.

plot(negd_x, xlab = "Total Scores Form X", ylab = "Common Anchor Scores Form X")

The two distributions are roughly normal, with some irregularities for the total scores for form X (note the presence of various peaks near the center of this distribution). Because there are irregularities, assumed to arise from measurement error, presmoothing could be performed. There are a variety of presmoothing options available in equate. One option involves fitting a log linear model. Log linear presmoothing can be specified for forms X and Y as follows:

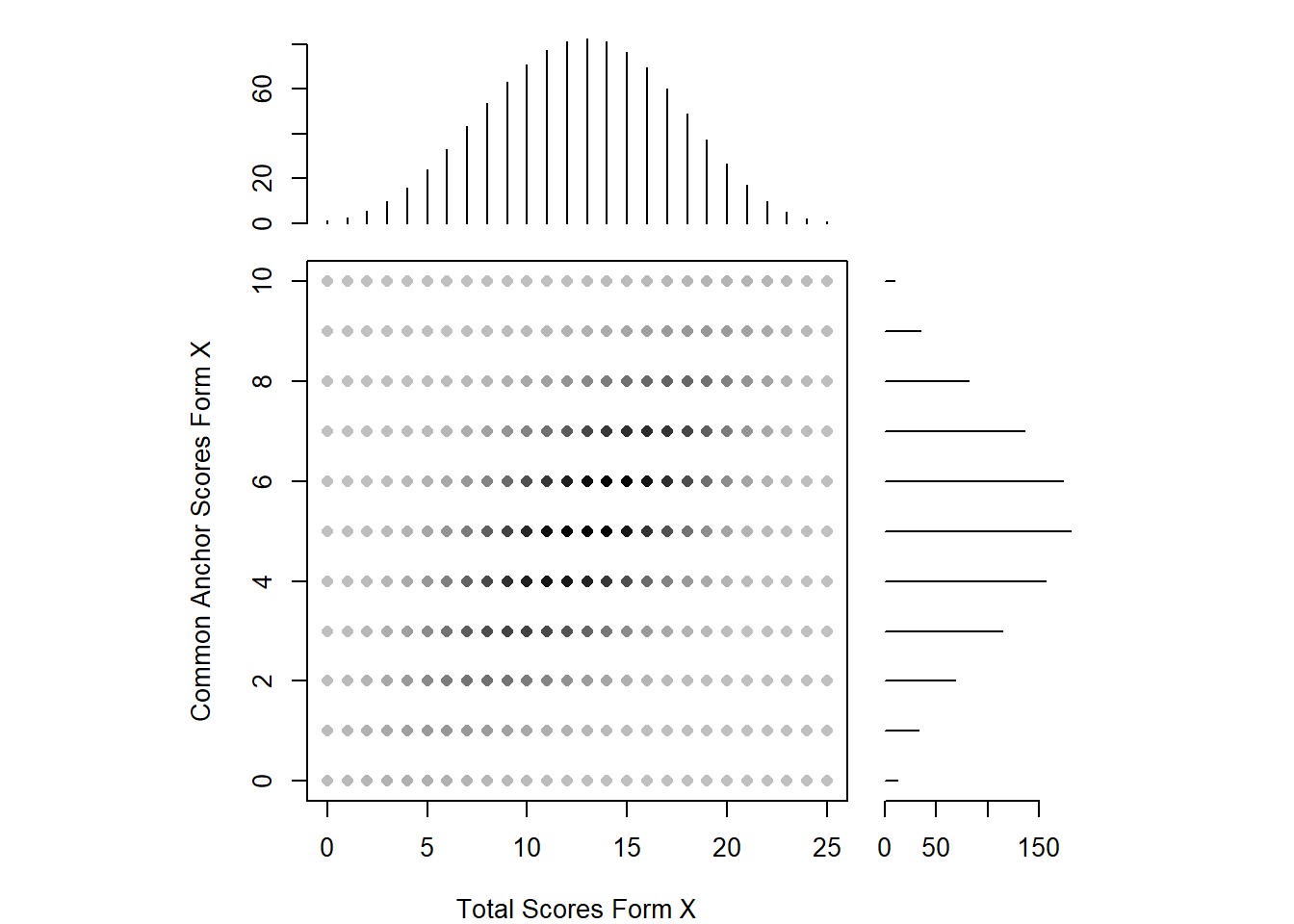

smooth_x <- presmoothing(negd_x, smoothmethod = "loglinear")

smooth_y <- presmoothing(negd_y, smoothmethod = "loglinear")Now, let’s check out the plot again.

plot(smooth_x, xlab = "Total Scores Form X", ylab = "Common Anchor Scores Form X")

Linear Tucker Equating

Now we will use linear equating based on the Tucker method.

negd_tucker <- equate(negd_x, negd_y, type = "linear", method = "tucker")

negd_tucker$concordance## scale yx se.n se.g

## 1 0 4.439083 0.3480743 0.3400317

## 2 1 5.220574 0.3261043 0.3197119

## 3 2 6.002065 0.3044597 0.2996877

## 4 3 6.783557 0.2832149 0.2800225

## 5 4 7.565048 0.2624671 0.2607975

## 6 5 8.346539 0.2423440 0.2421177

## 7 6 9.128031 0.2230147 0.2241194

## 8 7 9.909522 0.2047041 0.2069804

## 9 8 10.691013 0.1877108 0.1909323

## 10 9 11.472505 0.1724244 0.1762733

## 11 10 12.253996 0.1593373 0.1633778

## 12 11 13.035487 0.1490297 0.1526932

## 13 12 13.816978 0.1421079 0.1447100

## 14 13 14.598470 0.1390783 0.1398916

## 15 14 15.379961 0.1401934 0.1385683

## 16 15 16.161452 0.1453580 0.1408389

## 17 16 16.942944 0.1541655 0.1465363

## 18 17 17.724435 0.1660372 0.1552838

## 19 18 18.505926 0.1803692 0.1666016

## 20 19 19.287418 0.1966241 0.1800056

## 21 20 20.068909 0.2143650 0.1950662

## 22 21 20.850400 0.2332530 0.2114297

## 23 22 21.631892 0.2530314 0.2288166

## 24 23 22.413383 0.2735071 0.2470111

## 25 24 23.194874 0.2945347 0.2658473

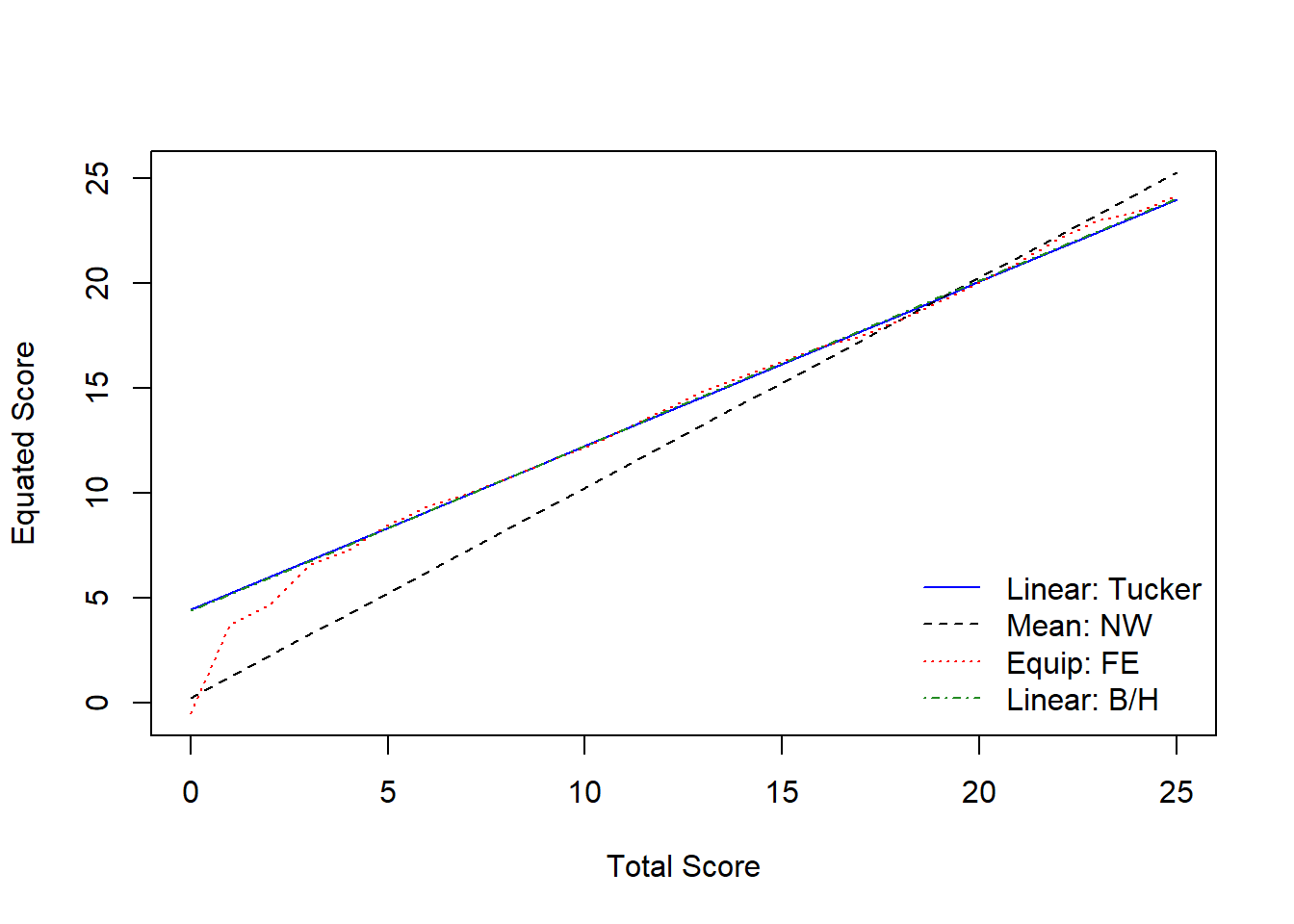

## 26 25 23.976366 0.3160041 0.2851982Comparing Multiple Methods

# Nominal method with mean equating

negd_nom <- equate(negd_x, negd_y, type = "mean", method = "nom")

# Frequency method with equipercentile

negd_freq <- equate(negd_x, negd_y, type = "equip", method = "freq")

# Braun method with linear equating

negd_braun <- equate(negd_x, negd_y, type = "linear", method = "braun")

# Compare equated scores

round(cbind(xscale = 0:25,

nominal = negd_nom$concordance$yx,

tucker = negd_tucker$concordance$yx,

freq = negd_freq$concordance$yx,

braun = negd_braun$concordance$yx), 2)## xscale nominal tucker freq braun

## [1,] 0 0.25 4.44 -0.50 4.41

## [2,] 1 1.25 5.22 3.76 5.20

## [3,] 2 2.25 6.00 4.69 5.98

## [4,] 3 3.25 6.78 6.56 6.77

## [5,] 4 4.25 7.57 7.29 7.55

## [6,] 5 5.25 8.35 8.51 8.34

## [7,] 6 6.25 9.13 9.35 9.12

## [8,] 7 7.25 9.91 9.98 9.91

## [9,] 8 8.25 10.69 10.72 10.70

## [10,] 9 9.25 11.47 11.49 11.48

## [11,] 10 10.25 12.25 12.18 12.27

## [12,] 11 11.25 13.04 13.03 13.05

## [13,] 12 12.25 13.82 13.94 13.84

## [14,] 13 13.25 14.60 14.84 14.62

## [15,] 14 14.25 15.38 15.57 15.41

## [16,] 15 15.25 16.16 16.31 16.19

## [17,] 16 16.25 16.94 16.98 16.98

## [18,] 17 17.25 17.72 17.47 17.77

## [19,] 18 18.25 18.51 18.24 18.55

## [20,] 19 19.25 19.29 19.12 19.34

## [21,] 20 20.25 20.07 20.05 20.12

## [22,] 21 21.25 20.85 21.03 20.91

## [23,] 22 22.25 21.63 22.09 21.69

## [24,] 23 23.25 22.41 23.00 22.48

## [25,] 24 24.25 23.19 23.39 23.26

## [26,] 25 25.25 23.98 24.16 24.05# Plot the results

plot(negd_tucker, negd_nom, negd_freq, negd_braun, lty=c(1,2,3,4),

col=c("blue", "black", "red", "forestgreen"), addident = FALSE)